Quick Backstory

I’ve dabbled with big data before—ran some batch jobs, cleaned up log files, maybe processed a CSV with a few million rows. But nothing at real “big data” scale. You know, the kind where your laptop fans start screaming and Apache Spark is the only way out.

So when a buddy asked if I could help process hundreds of gigabytes of logs per day, I figured this was my chance to finally dive deep into Apache Spark.

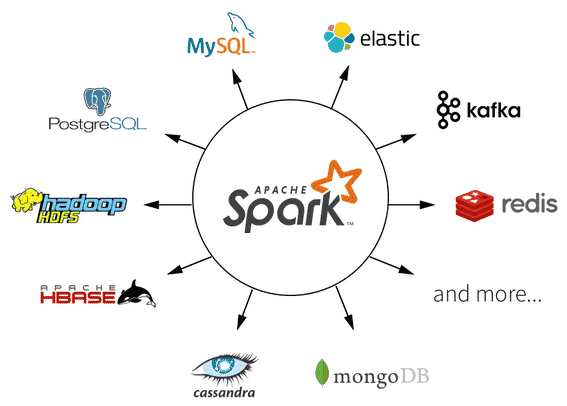

Why Apache Spark (and Why Scala)?

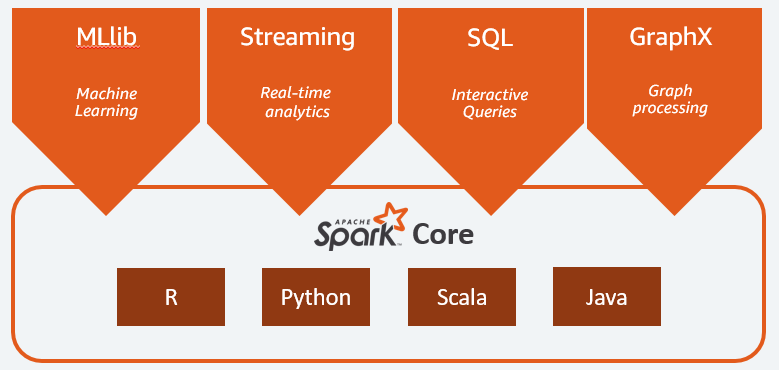

Sure, I could’ve used PySpark. But I wanted to try Spark in its native tongue—Scala. It’s one of those languages I respected from afar but never got hands-on with.

Turns out:

- Scala is expressive, a little intense at first, but great once it clicks.

- Spark just makes sense in Scala. Spark itself is written in Scala, so the APIs are native, and custom functions run inside the JVM instead of paying for a Python round trip.

Also: Spark handles distributed computing like a champ. You focus on transformations—Spark takes care of the dirty cluster work.

Getting Apache Spark Set Up

Here’s what tripped me up during setup:

- Wrong Java version (Spark is picky)

- Misconfigured spark-defaults.conf (cost me hours)

- Permissions errors on HDFS (classic facepalm)

Once configured though, submitting and testing jobs felt smooth.

What the Job Did

Here was the task:

- Ingest daily server logs (text files up to 50GB each)

- Parse timestamps, error codes, IPs, etc.

- Group and aggregate by error type and time window

- Output analytics for reporting

Doing this in plain Python on a single machine? Painfully slow at this volume. In Spark? Fast and clean, once I got the logic right.
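To make the task concrete, here is a minimal sketch of that kind of pipeline using the DataFrame API. It is not the actual job: the log line layout, the 10-minute window, the object name `LogErrorReport`, and the Parquet output are all assumptions made for illustration.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.functions.{col, count, regexp_extract, to_timestamp, window}

object LogErrorReport {
  // ASSUMED line layout: "2024-05-01 12:34:56 203.0.113.7 E500 free-text message"
  private val LinePattern =
    """^(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}) (\S+) (\S+)"""

  /** Turns a DataFrame with one string column `value` into typed columns. */
  def parse(raw: DataFrame): DataFrame =
    raw
      .filter(col("value").rlike(LinePattern)) // skip lines that don't fit the assumed layout
      .select(
        to_timestamp(regexp_extract(col("value"), LinePattern, 1), "yyyy-MM-dd HH:mm:ss").as("ts"),
        regexp_extract(col("value"), LinePattern, 2).as("ip"),
        regexp_extract(col("value"), LinePattern, 3).as("error_code")
      )

  /** Counts events per error code in fixed 10-minute windows (window size is arbitrary). */
  def errorsPerWindow(parsed: DataFrame): DataFrame =
    parsed
      .groupBy(window(col("ts"), "10 minutes"), col("error_code"))
      .agg(count("*").as("events"))

  def main(args: Array[String]): Unit = {
    // The master URL is expected to come from spark-submit, not from the code
    val spark = SparkSession.builder().appName("log-error-report").getOrCreate()
    val raw = spark.read.text(args(0)) // one row per log line, in a column named "value"
    errorsPerWindow(parse(raw)).write.mode("overwrite").parquet(args(1))
    spark.stop()
  }
}
```

Keeping `parse` and `errorsPerWindow` as plain functions from DataFrame to DataFrame makes them easy to try on a handful of lines locally before pointing the job at a 50GB file.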

Stuff I Liked

Lazy Evaluation

Spark doesn’t execute transformations until you run an action like .collect() or .saveAsTextFile(). That’s super handy for debugging: you can build a pipeline step by step and only pay for the work an action actually needs.
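If you want to see the laziness for yourself, a small experiment like the one below works. It is only a sketch: the object name `LazyDemo` and the sample lines are made up, and the accumulator is there purely to count how many records the map step has touched.

```scala
import org.apache.spark.sql.SparkSession

object LazyDemo {
  /** Returns (records touched before the action, records touched after it, error count). */
  def run(spark: SparkSession): (Long, Long, Long) = {
    val sc = spark.sparkContext
    val touched = sc.longAccumulator("touched")

    // Transformations only: Spark records the plan but runs nothing yet
    val errors = sc.parallelize(Seq("INFO ok", "ERROR disk full", "ERROR timeout"))
      .map { line => touched.add(1); line }
      .filter(_.startsWith("ERROR"))

    val before = touched.sum      // still 0, because no job has been triggered

    val errorCount = errors.count() // action: this is what actually runs the job
    val after = touched.sum

    (before, after, errorCount)
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().master("local[*]").appName("lazy-demo").getOrCreate()
    println(run(spark))
    spark.stop()
  }
}
```

The flip side is that a bug in a transformation only shows up when the action runs, so the stack trace can point at a line far from where the mistake was written.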

RDDs and DataFrames

RDDs give you low-level control. DataFrames are perfect for SQL-style operations. Both are awesome once you understand the difference.
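Here is the same tiny aggregation written both ways, as a rough illustration of that difference. The object name `RddVsDataFrame`, the column name `error_code`, and the sample codes are invented for the example.

```scala
import org.apache.spark.sql.SparkSession

object RddVsDataFrame {
  /** RDD style: you spell out the key/value pairs and the reduce step yourself. */
  def countWithRdd(spark: SparkSession, codes: Seq[String]): Map[String, Long] =
    spark.sparkContext.parallelize(codes)
      .map(code => (code, 1L))
      .reduceByKey(_ + _)
      .collect()
      .toMap

  /** DataFrame style: say what you want and let Spark's optimizer plan it. */
  def countWithDataFrame(spark: SparkSession, codes: Seq[String]): Map[String, Long] = {
    import spark.implicits._
    codes.toDF("error_code")
      .groupBy("error_code")
      .count()                // adds a column named "count"
      .as[(String, Long)]     // tuple columns are matched by position
      .collect()
      .toMap
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().master("local[*]").appName("rdd-vs-df").getOrCreate()
    val codes = Seq("E500", "E404", "E500")
    println(countWithRdd(spark, codes))
    println(countWithDataFrame(spark, codes))
    spark.stop()
  }
}
```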

Seamless Cluster Scaling

Ran a job locally: 20 minutes. Ran it on a 4-node cluster: 3 minutes. No code changes required.

Things That Sucked

Scala’s Type System

Not bad once you’re in the groove, but it’s no JavaScript. You’ll battle with types for a bit.

Vague Error Messages

Especially for executor crashes. A single null value can take down a task, and the actual cause ends up buried somewhere in 500 lines of logs.

Config Tuning is Black Magic

Executor memory, parallelism, shuffle partitions… it takes a lot of trial, error, and mild cursing.
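For reference, these are the knobs in question, set in code when the session is built. The property names are real Spark settings, but the values are placeholders and not recommendations; the right numbers depend on your cluster and data. The object name `TunedSession` is made up.

```scala
import org.apache.spark.sql.SparkSession

object TunedSession {
  def build(): SparkSession =
    SparkSession.builder()
      .appName("tuned-log-job")
      // Placeholder values below: tune them against your own cluster
      .config("spark.executor.memory", "4g")          // heap size per executor
      .config("spark.executor.cores", "2")            // concurrent tasks per executor
      .config("spark.default.parallelism", "64")      // default partition count for RDD shuffles
      .config("spark.sql.shuffle.partitions", "64")   // partition count for DataFrame shuffles
      .getOrCreate()                                  // master URL is expected from spark-submit
}
```

The same keys can live in spark-defaults.conf or be passed with `--conf` on spark-submit, which keeps tuning out of the code and makes it easier to change one setting at a time between runs.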

3 Random Things I Learned About Apache Spark

- You can run Spark SQL directly on DataFrames. Great for ad hoc querying.

- Caching DataFrames saves time when they are reused.

- Watching the Spark UI is weirdly satisfying—those little green boxes lighting up stage by stage.
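The first two points fit in a few lines. This is a sketch under assumptions: the view name `log_events`, the column `error_code`, and the object name `SqlAndCache` are invented for the example.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}

object SqlAndCache {
  /** Ranks error codes by frequency using plain SQL over a DataFrame. */
  def topErrors(spark: SparkSession, events: DataFrame): DataFrame = {
    events.cache()                               // lazy: filled in by the first action, reused after that
    events.createOrReplaceTempView("log_events") // exposes the DataFrame to SQL under an assumed name
    spark.sql(
      """SELECT error_code, COUNT(*) AS hits
        |FROM log_events
        |GROUP BY error_code
        |ORDER BY hits DESC""".stripMargin)
  }
}
```

Caching only pays off when the same DataFrame feeds more than one action. For a single pass it just costs memory, and calling `unpersist()` afterwards frees it.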

Would I Use It Again?

Absolutely.

- For quick jobs? PySpark’s probably easier.

- For production-grade, heavy-duty ETL? Scala + Spark is powerful.

Yes, you’ll hit walls. A cluster node will die. Configs will break. But once it clicks? You’re flying.

Final Thought

If you’re tired of pretending you understand big data tools during meetings—just start playing with Spark.

- Grab a few large files

- Set up a local Spark instance

- Write some Scala

- Break things. Fix them. Repeat.

It’ll feel frustrating at first. But when your first distributed job finishes in seconds instead of hours, you’ll feel like a wizard.
