First Time I Met NiFi

So, what is Apache NiFi?

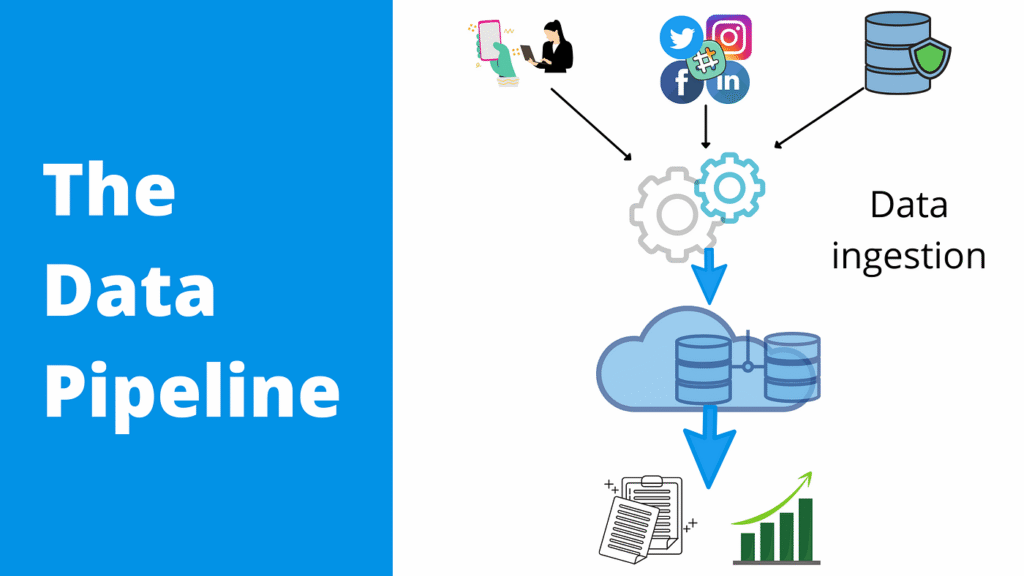

In my totally non-technical, half-caffeinated words: NiFi is like a digital conveyor belt for your data. It moves stuff from place to place, tweaks it if needed, and does it all through a visual drag-and-drop interface. Think of it more like wiring a circuit board than writing code.

You don’t write traditional programs in NiFi. You build data pipelines with boxes (called processors) and lines (called connections). Each processor does something small—read from a source, split a file, convert formats, send to a target system.

It’s visual, intuitive, and honestly… kind of addictive.

The Day I Ditched My Bash Scripts

I’ll never forget the day I replaced my home-grown ingestion hackathon—AKA spaghetti shell scripts and Python band-aids—with a clean NiFi pipeline.

Here’s the setup I moved to NiFi:

- A folder on an SFTP server received 10+ CSVs per day from multiple vendors.

- Each file had a slightly different format.

- My task: Grab the files, normalize formats, enrich with metadata, and load into a database.

What used to be a fragile 300-line mess became a beautiful NiFi canvas with 10 processors and 3 clean routes. I could watch data flow in real time, see failures instantly, and fix issues without touching logs or servers.
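
To make the "normalize and enrich" step concrete, here is a minimal plain-Python sketch of the idea, not the actual NiFi flow. The vendor names, column headers, and file names are all made up for illustration, since the real ones aren't part of this post.

```python
import csv
import io

# Hypothetical per-vendor header mappings (assumed for this example only).
COLUMN_MAPS = {
    "vendor_a": {"Order ID": "order_id", "Amount": "amount"},
    "vendor_b": {"order_no": "order_id", "total_usd": "amount"},
}


def normalize(vendor: str, source_file: str, raw_csv: str) -> list:
    """Rename vendor-specific columns to a shared schema and attach metadata."""
    mapping = COLUMN_MAPS[vendor]
    rows = []
    for record in csv.DictReader(io.StringIO(raw_csv)):
        row = {target: record[source].strip() for source, target in mapping.items()}
        # Enrichment: remember where each row came from.
        row["vendor"] = vendor
        row["source_file"] = source_file
        rows.append(row)
    return rows


# Two vendors, two different layouts, one output schema.
vendor_a_csv = "Order ID,Amount\n1001,25.50\n"
vendor_b_csv = "order_no,total_usd\n2001, 13.00\n"

rows = normalize("vendor_a", "a_2024.csv", vendor_a_csv) + normalize(
    "vendor_b", "b_2024.csv", vendor_b_csv
)
```

Every row comes out with the same four keys, which is what makes the final database load boring (in a good way).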

Honestly, it felt like leveling up.

Why NiFi Just Works

Here’s why I think NiFi is a game-changer for anyone handling data:

- It’s visual. You build with your mouse. You can explain the flow to your PM in a live meeting—and they’ll actually understand it.

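- It handles back pressure. Got a data surge? When a connection’s queue hits its configured threshold, NiFi stops scheduling the upstream processor until the queue drains, so the flow slows down instead of crashing.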

- It’s modular. Swap out a processor, update a config—no redeploys needed.

- It connects to everything. Kafka, S3, HDFS, FTP, MongoDB, SQL, REST APIs—you name it.

Bonus? It’s open source. No license drama. Just download and go.
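
If back pressure sounds abstract, here is a toy model of it in Python. It is only a sketch of the concept: the `Connection` class, the threshold of 4, and the function names are invented for the example and are not NiFi APIs. In NiFi the thresholds live on each connection and are set in the UI.

```python
from collections import deque


class Connection:
    """A queue between two processors with an object-count threshold."""

    def __init__(self, threshold: int):
        self.threshold = threshold
        self.queue = deque()

    def back_pressure_applied(self) -> bool:
        return len(self.queue) >= self.threshold


def run_source(pending: deque, conn: Connection) -> int:
    """Move items downstream until the connection signals back pressure."""
    moved = 0
    while pending and not conn.back_pressure_applied():
        conn.queue.append(pending.popleft())
        moved += 1
    return moved


def run_sink(conn: Connection, batch: int) -> list:
    """Drain up to `batch` items, which frees room for the source."""
    return [conn.queue.popleft() for _ in range(min(batch, len(conn.queue)))]


pending = deque(range(10))          # a surge of 10 items
conn = Connection(threshold=4)      # toy threshold for the example

first = run_source(pending, conn)   # source pauses once the queue is full
drained = run_sink(conn, batch=2)   # a slow consumer takes 2 items
second = run_source(pending, conn)  # source resumes and tops the queue back up
```

The source never loses data and never overruns the consumer. It just waits, which is the whole point.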

One Thing I Love: FlowFile Attributes

This might sound nerdy—but FlowFile Attributes are a hidden gem.

Each piece of data in NiFi carries metadata with it—file names, timestamps, source paths, even custom tags you add during flow. You can route and filter data dynamically based on these attributes.

Want to process Vendor A’s files differently from Vendor B’s? Easy. Route by filename, type, or even internal tag. It’s flexible. And it puts you in control.
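
Here is a small Python sketch of attribute-based routing, just to show the shape of the idea. The `FlowFile` class is a toy stand-in, and the `vendorA_` / `vendorB_` filename prefixes are assumptions for the example. In NiFi itself this would typically be a RouteOnAttribute processor with an Expression Language rule along the lines of `${filename:startsWith('vendorA_')}`.

```python
from dataclasses import dataclass, field


@dataclass
class FlowFile:
    """Toy stand-in for a NiFi FlowFile: content plus string attributes."""
    content: bytes
    attributes: dict = field(default_factory=dict)


# Hypothetical routing rules: route name -> predicate over the attributes.
ROUTES = {
    "vendor_a": lambda attrs: attrs.get("filename", "").startswith("vendorA_"),
    "vendor_b": lambda attrs: attrs.get("filename", "").startswith("vendorB_"),
}


def route(flowfile: FlowFile) -> str:
    """Return the first matching route, or 'unmatched' if none apply.

    Simplification: this picks a single route, whereas a real flow can be
    configured to send a FlowFile down every matching route.
    """
    for name, matches in ROUTES.items():
        if matches(flowfile.attributes):
            return name
    return "unmatched"


flowfiles = [
    FlowFile(b"id,amount\n", {"filename": "vendorA_2024-01-01.csv"}),
    FlowFile(b"id;total\n", {"filename": "vendorB_jan.csv", "source": "sftp"}),
    FlowFile(b"hello", {"filename": "readme.txt"}),
]
routes = [route(f) for f in flowfiles]
```

Notice that routing never looks at `content`. The decision is made purely on metadata, which is why it stays cheap even for big files.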

Stuff That Tripped Me Up (At First)

Not gonna lie—there’s a learning curve. My early stumbles included:

- Terminology confusion. Processors, FlowFiles, relationships—it all felt like Lego from different sets.

- No automatic error routing. You have to wire failure paths yourself. Auto-terminate a failure relationship instead, and those FlowFiles are dropped silently.

- Opinionated workflows. NiFi doesn’t always work your way. You’ll have to adapt.
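
The failure-path point is easier to see in code. This is a hedged Python sketch of the pattern, not NiFi code: each step reports which relationship its output belongs to, and the caller keeps a queue for both outcomes. The function names and the JSON-parsing step are assumptions picked for the example.

```python
import json


def parse_json(content: bytes) -> tuple:
    """Return (relationship, payload): parsed data on success, error details on failure."""
    try:
        return "success", json.loads(content)
    except (json.JSONDecodeError, UnicodeDecodeError) as exc:
        return "failure", {"error": str(exc), "raw": content.decode("utf-8", "replace")}


def run(inputs: list) -> dict:
    """Send every result to the queue for its relationship, so nothing is dropped."""
    queues = {"success": [], "failure": []}
    for content in inputs:
        relationship, payload = parse_json(content)
        queues[relationship].append(payload)
    return queues


queues = run([b'{"id": 1}', b"not json", b'{"id": 2}'])
```

The bad record ends up in `queues["failure"]` with its raw content and error message attached, ready to be inspected or retried, instead of vanishing.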

But once it clicks, it just… works. Like a reliable coffee maker: press the button, walk away, trust it to do its job.

Real-World Apache NiFi Example: Logs, Logs, Logs

We had logs from multiple environments that needed to be shipped into a central Elasticsearch cluster. The old way? Everyone used different tools—Logstash, homegrown agents, duct tape.

It was chaos.

So I built a NiFi flow that:

- Pulled logs from various endpoints

- Parsed them into a consistent format

- Added metadata tags

- Shipped them to Elasticsearch

Best part? I didn’t touch the source services. No agents. No downtime. Just NiFi in the middle doing its thing.
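
For a feel of what the parse, tag, and ship steps amount to, here is a rough Python sketch. The log line shape (`<timestamp> <LEVEL> <message>`), the `environment` tag, and the `logs` index name are assumptions for illustration, since the real formats aren't shown here. The output follows the newline-delimited layout of Elasticsearch's bulk API, but the sketch only builds the request body and doesn't send anything.

```python
import json
import re
from typing import Optional

# Assumed log line shape for this example: "<timestamp> <LEVEL> <message>".
LINE_PATTERN = re.compile(r"^(?P<timestamp>\S+) (?P<level>[A-Z]+) (?P<message>.*)$")


def parse_line(line: str, environment: str) -> Optional[dict]:
    """Parse one log line into a consistent dict, or None if it doesn't match."""
    match = LINE_PATTERN.match(line.strip())
    if match is None:
        return None
    doc = match.groupdict()
    doc["environment"] = environment  # metadata tag added in flight
    return doc


def to_bulk_body(docs: list, index: str) -> str:
    """Build a bulk request body: one action line, then one document line, per doc."""
    lines = []
    for doc in docs:
        lines.append(json.dumps({"index": {"_index": index}}))
        lines.append(json.dumps(doc))
    return "\n".join(lines) + "\n"


raw_lines = ["2024-05-01T12:00:00Z ERROR disk full", "garbage"]
parsed = (parse_line(line, "staging") for line in raw_lines)
docs = [doc for doc in parsed if doc is not None]
body = to_bulk_body(docs, "logs")
```

In a real flow, the unparseable line would go down a failure path rather than being filtered out quietly as it is here.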

Ops loved it. I looked like a hero.

What It’s NOT Good At

Let’s keep it real—NiFi isn’t perfect. Here’s where it struggles:

- It’s not a data warehouse. Don’t use it for storage or querying.

- Complex logic can turn into spaghetti. Break up large flows or rethink the design.

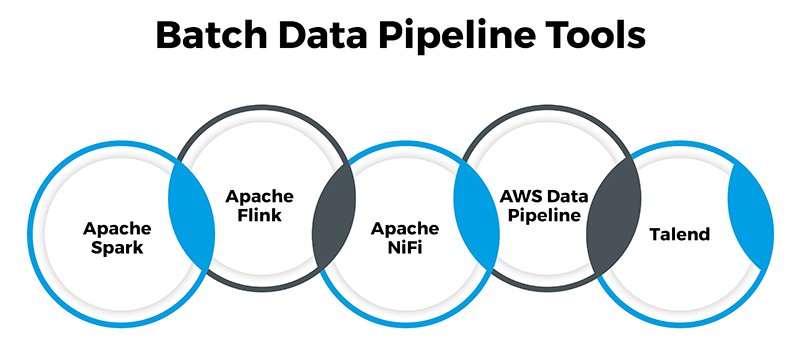

- Heavy transformations? Use Spark or dbt. NiFi is better for flow than for crunching.

Still, for ingestion and routing? NiFi is a rockstar.

Final Thoughts from a Former Data Janitor

If I had to summarize Apache NiFi in one line:

“It gives you peace of mind about your data pipelines.”

No more silent failures. No more debugging shell scripts at 2 a.m. Just clean, visible, auditable flows that make your job easier—and your data cleaner.

If you touch data—even occasionally—NiFi is worth exploring. You won’t want to go back.
