If you had asked me back in 2021 whether I’d ever enjoy working with Apache Kafka, I would’ve rolled my eyes. Another distributed system to babysit? No, thanks.

But fast forward to 2025: I’m sitting here with cold coffee and a heat-blasting laptop, genuinely appreciating how Apache Kafka, paired with Node.js, has become one of my go-to combos for building real-time, event-driven systems.

This post isn’t a shiny “10 Reasons to Love Kafka” list. It’s a reflection. A walkthrough. A hands-on dev’s tech diary.

Event-Driven Mindset in 2025

It’s 2025. The demand for real-time, reactive applications is stronger than ever:

- Users expect live notifications, AI recommendations, and dynamic dashboards.

- Traditional batch jobs? Almost obsolete.

- Responsiveness across microservices is no longer a luxury—it’s expected.

That’s where event-driven architecture shines.

Instead of blocking operations with request/response, services react to events. Things flow naturally:

- Button click? Event.

- Payment success? Event.

- Sensor update? Yep—another event.

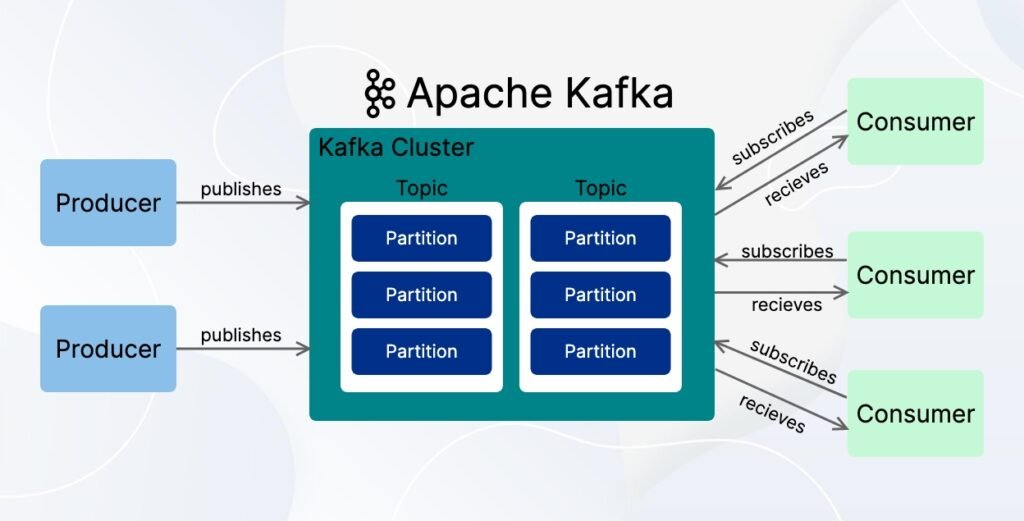
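To make the "services react to events" idea concrete, here is a minimal in-memory sketch. It is not Kafka and it is not from any of my projects: the `EventBus` class, the event names, and the payload fields are all made up for illustration. The point is only that the publisher never calls the email or audit code directly.

```typescript
// Hypothetical event shapes, invented for this sketch.
type AppEvent =
  | { type: "button.clicked"; buttonId: string }
  | { type: "payment.succeeded"; orderId: string; amountCents: number }
  | { type: "sensor.updated"; sensorId: string; value: number };

type Listener = (event: AppEvent) => void;

// A tiny in-process pub/sub bus. Unlike Kafka, nothing is stored durably and
// delivery is synchronous; it only illustrates the decoupling.
class EventBus {
  private listeners = new Map<AppEvent["type"], Listener[]>();

  subscribe(type: AppEvent["type"], listener: Listener): void {
    const existing = this.listeners.get(type) ?? [];
    this.listeners.set(type, [...existing, listener]);
  }

  publish(event: AppEvent): void {
    // Events with no subscribers are simply dropped in this sketch.
    for (const listener of this.listeners.get(event.type) ?? []) {
      listener(event);
    }
  }
}

const bus = new EventBus();
const emailsQueued: string[] = [];
const auditLog: string[] = [];

// Two independent reactions to the same event.
bus.subscribe("payment.succeeded", (event) => {
  if (event.type === "payment.succeeded") {
    emailsQueued.push(`receipt for order ${event.orderId}`);
  }
});
bus.subscribe("payment.succeeded", (event) => {
  auditLog.push(event.type);
});

bus.publish({ type: "payment.succeeded", orderId: "order-42", amountCents: 1999 });
bus.publish({ type: "button.clicked", buttonId: "checkout" });
```

Adding a third reaction to a payment means adding another `subscribe` call, with no change to whoever publishes the event.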

But to handle millions of events per day, you need a message broker that’s tough enough. Kafka is that broker. Reliable, scalable, and built for chaos.

Kafka Feels Intimidating at First

I’ll admit it: Kafka scared me. The lingo (topics, brokers, offsets) felt like decoding ancient scrolls. And don’t even get me started on ZooKeeper (which Kafka 4.0 finally dropped in favor of KRaft).

But once I grasped the fundamentals, I saw it clearly:

Kafka is a distributed commit log. Services publish messages, Kafka stores them durably, and consumers read them at their own pace.

That’s what makes it beautiful: Decoupling. My Node.js microservice doesn’t care if the email service is down. Kafka holds the event. Everyone catches up when they can.
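Here is roughly how I picture that commit log in my head, as a toy in-memory model. Treat it as a sketch under heavy assumptions: `CommitLog`, `poll`, and the group names are hypothetical, there is a single partition, and offsets are committed automatically on every poll, which real Kafka clients let you control separately.

```typescript
interface LogRecord {
  offset: number;
  value: string;
}

// Toy single-partition log. Records are append-only and never removed on read;
// each consumer group only tracks its own position.
class CommitLog {
  private records: LogRecord[] = [];
  // group id -> next offset that group will read
  private committed = new Map<string, number>();

  append(value: string): number {
    const offset = this.records.length;
    this.records.push({ offset, value });
    return offset;
  }

  poll(groupId: string, maxRecords: number): LogRecord[] {
    const start = this.committed.get(groupId) ?? 0;
    const batch = this.records.slice(start, start + maxRecords);
    // Simplification: commit immediately after handing out the batch.
    this.committed.set(groupId, start + batch.length);
    return batch;
  }
}

const log = new CommitLog();
log.append("order.created:1");
log.append("order.created:2");
log.append("order.created:3");

// The analytics service is up and reads everything right away.
const analyticsBatch = log.poll("analytics", 10);

// The email service was "down" and only starts polling now.
// Nothing was lost: it reads from its own offset, at its own pace.
const emailFirst = log.poll("email", 2);
const emailSecond = log.poll("email", 2);
```

The two groups never interfere with each other, which is the decoupling described above: a slow or offline consumer only delays itself.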

So… Why Node.js?

Because Node.js is fast—not just at runtime, but during development.

- Familiar async model

- Lightweight for microservices

- Ideal for reacting to Kafka events

One of my favorite Kafka+Node.js projects involved an e-commerce platform where we:

- Tracked user activity in real-time

- Broadcast inventory updates across services

- Triggered personalized emails based on behavior

Each service had its own role. Kafka was the brainstem, and Node.js the limbs reacting instantly.

What I Wish I Knew Earlier About Kafka

Let me save you some pain. Here are a few lessons I learned the hard way:

- Partitioning Matters: Plan for it early. Poor partition strategy = poor scalability.

- Use a Schema Registry: Your event structure will evolve. Without schema versioning? Nightmare.

- Exactly-Once Semantics Exist: Yes, Kafka now supports it (properly). Configure it right, especially for payments or sensitive flows.

- Don’t Overengineer: Keep things modular, but avoid “events triggering events triggering events.” That way lies madness.
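On the partitioning point, the core idea is that a message key is hashed to pick a partition, so everything with the same key stays in order on one partition. Below is a minimal sketch of that idea. Big assumption: I use a simple FNV-1a hash here as a stand-in, so the partition numbers will not match what a real Kafka client computes. The event data and `NUM_PARTITIONS` are made up.

```typescript
// 32-bit FNV-1a over UTF-16 code units.
// Assumption: a stand-in hash for illustration, NOT the hash a Kafka client uses.
function fnv1a(key: string): number {
  let hash = 0x811c9dc5;
  for (let i = 0; i < key.length; i++) {
    hash ^= key.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193);
  }
  return hash >>> 0; // force an unsigned 32-bit result
}

function partitionFor(key: string, numPartitions: number): number {
  if (!Number.isInteger(numPartitions) || numPartitions <= 0) {
    throw new RangeError("numPartitions must be a positive integer");
  }
  return fnv1a(key) % numPartitions;
}

// Hypothetical user-activity events, keyed by user id.
const events = [
  { key: "user-1", action: "view" },
  { key: "user-2", action: "view" },
  { key: "user-1", action: "add_to_cart" },
  { key: "user-2", action: "remove_from_cart" },
  { key: "user-1", action: "checkout" },
];

const NUM_PARTITIONS = 6;
const byPartition = new Map<number, string[]>();

for (const event of events) {
  const partition = partitionFor(event.key, NUM_PARTITIONS);
  const bucket = byPartition.get(partition) ?? [];
  bucket.push(`${event.key}:${event.action}`);
  byPartition.set(partition, bucket);
}
```

Two things fall out of this. A hot key (one very busy user or tenant) sends all its traffic to a single partition, and changing the partition count later can move keys to different partitions. Both are reasons to think about keys and partition counts early.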

Real-World Kafka Wins

A few personal highlights:

Notification System Rebuild:

Old email triggers were flaky and late.

New Kafka-backed setup? Real-time emails, better user engagement, fewer support tickets.

Fraud Detection:

Every transaction flowed through Kafka. Downstream services flagged anomalies within seconds.

Speed + flexibility = magic.

But It’s Not All Roses

Kafka in 2025 still has quirks:

- Misconfigured consumers can fall behind without warning.

- Storage costs grow fast if you forget retention limits.

- Debugging? Still tough. Reading Kafka logs is like fortune-telling sometimes.

- Monitoring adds overhead (Prometheus, Grafana, Kafka Manager, Confluent UI… all helpful but complex).
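For the "consumers fall behind" problem, the number I watch is consumer lag: for each partition, the log end offset minus the group's committed offset. Here is a small sketch of that arithmetic. The offsets and the alert threshold are invented sample values; in practice you would pull the real numbers from your monitoring stack or from `kafka-consumer-groups.sh --describe`.

```typescript
interface PartitionOffsets {
  partition: number;
  logEndOffset: number;    // offset the next produced record will get
  committedOffset: number; // next offset the consumer group will read
}

interface LagReport {
  perPartition: Map<number, number>;
  total: number;
}

function computeLag(offsets: PartitionOffsets[]): LagReport {
  const perPartition = new Map<number, number>();
  let total = 0;
  for (const o of offsets) {
    // Clamp at zero in case the two offsets were sampled at slightly different times.
    const lag = Math.max(0, o.logEndOffset - o.committedOffset);
    perPartition.set(o.partition, lag);
    total += lag;
  }
  return { perPartition, total };
}

// Hypothetical threshold and sample data, for illustration only.
const LAG_ALERT_THRESHOLD = 1000;
const sample: PartitionOffsets[] = [
  { partition: 0, logEndOffset: 1500, committedOffset: 1500 }, // caught up
  { partition: 1, logEndOffset: 2000, committedOffset: 1250 }, // 750 behind
  { partition: 2, logEndOffset: 900, committedOffset: 0 },     // never committed
];

const report = computeLag(sample);
const shouldAlert = report.total > LAG_ALERT_THRESHOLD;
```

A raw threshold like this is crude. Lag that keeps growing over several checks is usually a better signal than any single snapshot, but even this simple check beats finding out from a support ticket.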

And while Kafka works well with Node.js, the Java client is the one maintained inside the Apache Kafka project itself, so new features tend to land there first and it keeps a slight edge in performance.

Why I’ll Still Recommend Kafka

If you’re building event-driven systems in 2025, I highly recommend Kafka.

- It’s reliable.

- It’s battle-tested.

- It scales beautifully alongside runtimes like Node.js.

Sure, it’s not the right fit for every app. But when you have multiple services reacting to high-frequency events, Kafka becomes your best friend.

Final Thoughts: Kafka as a Mindset

For me, Kafka isn’t just a message broker anymore—it’s a mindset shift.

You stop thinking about rigid workflows. Instead, you think in terms of events. What happened? What should react?

Kafka taught me to build systems that are more flexible, scalable, and resilient—not just “faster.”

It’s not perfect. You’ll fumble. But once you feel that first real-time flow click into place, you’ll understand why Kafka is more than just trendy—it’s practical.
