Why Code Security Needs More Than Just Rules

Writing secure code isn't most developers' favorite part of the job, but it's becoming unavoidable as vulnerabilities keep slipping into production. Everyone wants to ship fast, test just enough, and move on. And as more half-baked apps go live, security bugs creep in quietly and dangerously.

Having a solid code security scanner isn’t just helpful anymore. It’s essential.

This post isn’t a lecture on best practices. It’s more of a behind-the-scenes look at building a tool that combines AI, Python, and CodeQL to make code analysis smarter and less annoying.

What Makes CodeQL Different?

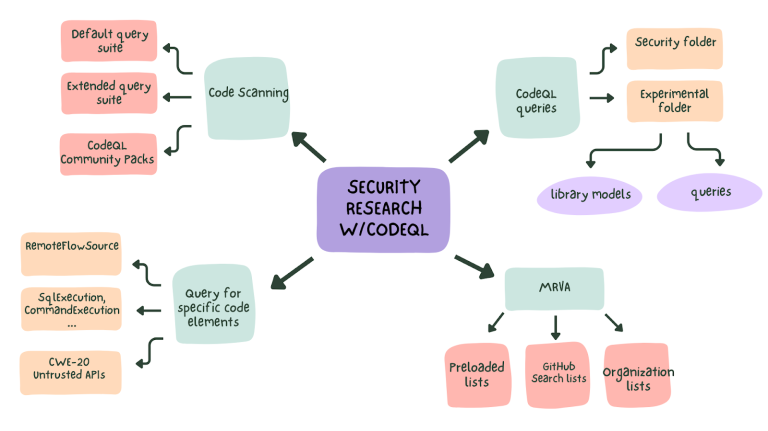

If you've never used CodeQL, here's the short version: it lets you query your codebase like a database. CodeQL first extracts your code into a database, then you write queries in its QL language (yeah, kind of like SQL) to find vulnerabilities, trace data flows, and spot unsafe patterns.

Because it models how data flows through a program, it can answer questions that simple pattern-matching scanners can't, like:

- Where does user input hit unsafe functions?

- Which parts write files without validation?

- Who’s touching sensitive APIs without checks?

In large or legacy codebases, this is gold. Instead of digging manually, CodeQL gives you high-level insight in one sweep.
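To make "user input hitting unsafe functions" concrete, here is a small, self-contained example of the source-to-sink flow that CodeQL's SQL injection query is designed to catch. It uses Python's built-in `sqlite3`; the table and function names are hypothetical. In a real app, `username` would come from something like a web request parameter, which is what CodeQL treats as a taint source. With a plain function argument, as here, the query may not fire at all.

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, username: str) -> list[tuple]:
    # UNSAFE: untrusted input is formatted straight into the SQL text.
    # If `username` came from a request, this is the source -> sink flow
    # a taint-tracking query looks for.
    query = f"SELECT id, name FROM users WHERE name = '{username}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, username: str) -> list[tuple]:
    # SAFE: the value is passed as a bound parameter, so the driver
    # never interprets it as SQL.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (username,)
    ).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

    payload = "x' OR '1'='1"
    print(find_user_unsafe(conn, payload))  # the injected OR clause matches every row
    print(find_user_safe(conn, payload))    # no user has that literal name, so []
```

The fix follows the usual advice for this class of bug: keep data out of the query text entirely rather than trying to escape it.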

Why Bring in AI?

Traditional static analysis tools generate a lot of noise, and much of it is false positives.

An AI model, on the other hand, can learn from real-world bugs. It can recognize code that doesn't just "match a rule" but resembles past examples of genuinely risky code.

So I built a small Python tool that:

- Parses repositories,

- Pulls out function trees and import structures,

- Runs CodeQL queries to find vulnerabilities.
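The "function trees and import structures" step can be done with Python's standard-library `ast` module. Below is a minimal sketch of one way to do it, not the tool's actual code; the `summarize_module` name and its output shape are my own assumptions.

```python
import ast


def summarize_module(source: str) -> dict:
    """Hypothetical helper: imported modules plus, per function, the names it calls."""
    tree = ast.parse(source)
    imports: set[str] = set()
    functions: dict[str, list[str]] = {}

    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            imports.update(alias.name for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module:
            # Relative imports such as `from . import x` have module=None.
            imports.add(node.module)
        elif isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            calls = []
            # ast.walk also descends into nested functions, so their calls
            # are attributed to the enclosing function as well.
            for inner in ast.walk(node):
                if isinstance(inner, ast.Call):
                    if isinstance(inner.func, ast.Name):         # foo()
                        calls.append(inner.func.id)
                    elif isinstance(inner.func, ast.Attribute):  # obj.foo()
                        calls.append(inner.func.attr)
            functions[node.name] = calls

    return {"imports": sorted(imports), "functions": functions}


if __name__ == "__main__":
    sample = (
        "import os\n"
        "from subprocess import run\n"
        "\n"
        "def cleanup(path):\n"
        "    os.remove(path)\n"
        "\n"
        "def deploy(cmd):\n"
        "    run(cmd, shell=True)\n"
    )
    print(summarize_module(sample))
    # {'imports': ['os', 'subprocess'], 'functions': {'cleanup': ['remove'], 'deploy': ['run']}}
```

A map like this gives the rest of the pipeline some structure to work with, for example knowing which functions reach `subprocess.run` at all.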

Example:

In one project, it flagged an exposed AWS token buried in a comment. Most rule-based tools missed it; the AI-powered scanner caught it because it resembled patterns from previous examples. That's the kind of signal that matters.

How the Setup Works

Here’s the simple pipeline I hacked together:

- Fetch the Codebase: A CLI wrapper pulls in local repos or GitHub projects.

- Run CodeQL Queries: Preloaded queries target Python-specific vulnerabilities such as SQL injection, unsafe file writes, and insecure crypto.

- Generate Reports: Clean HTML output, grouped by severity, with links to relevant code lines.
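The fetch and query steps map onto a couple of standard commands: `git clone` for GitHub projects, then `codeql database create` to extract the code and `codeql database analyze` to run a query pack and write SARIF. Here's a minimal Python wrapper sketch, assuming the `codeql` binary is on `PATH` and the standard `codeql/python-queries` pack is available locally. The function names and paths are hypothetical, and the original tool's wrapper may look quite different.

```python
import shutil
import subprocess
from pathlib import Path


def build_codeql_commands(
    source_root: Path,
    db_dir: Path,
    sarif_out: Path,
    query_spec: str = "codeql/python-queries",  # assumed to be installed
) -> list[list[str]]:
    """Hypothetical helper: the two CLI calls for one scan."""
    create = [
        "codeql", "database", "create", str(db_dir),
        "--language=python",
        f"--source-root={source_root}",
        "--overwrite",  # replace a database left over from a previous run
    ]
    analyze = [
        "codeql", "database", "analyze", str(db_dir), query_spec,
        "--format=sarif-latest",
        f"--output={sarif_out}",
    ]
    return [create, analyze]


def clone_repo(url: str, dest: Path) -> Path:
    """Hypothetical helper: shallow-clone a GitHub project to scan it locally."""
    subprocess.run(["git", "clone", "--depth", "1", url, str(dest)], check=True)
    return dest


def run_scan(source_root: Path, work_dir: Path) -> Path:
    """Run both CodeQL steps; raises CalledProcessError if either fails."""
    if shutil.which("codeql") is None:
        raise RuntimeError("codeql CLI not found on PATH")
    sarif_out = work_dir / "results.sarif"
    for cmd in build_codeql_commands(source_root, work_dir / "codeql-db", sarif_out):
        subprocess.run(cmd, check=True)
    return sarif_out
```

Keeping command construction separate from execution makes the wrapper easy to test without CodeQL installed, and `query_spec` is the single place to narrow which queries run.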

Each scan took around 4–7 minutes depending on repo size. Not instant, but a lot better than waiting for post-deploy security reviews.
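For the report step, CodeQL's SARIF output is plain JSON, so grouping findings by severity and rendering HTML needs only the standard library. This sketch groups by the SARIF `level` field (`error`, `warning`, `note`, `none`). The helper names and the `#L<line>` link format are assumptions for illustration; the tool's real report may group and link differently.

```python
import html
import json
from collections import defaultdict

# Display order for SARIF result levels, most severe first.
LEVEL_ORDER = ["error", "warning", "note", "none"]


def load_sarif(path: str) -> dict:
    """Hypothetical helper: read the SARIF file written by the analyze step."""
    with open(path, encoding="utf-8") as fh:
        return json.load(fh)


def group_by_level(sarif: dict) -> dict[str, list[dict]]:
    """Hypothetical helper: flatten SARIF results into {level: [finding, ...]}."""
    groups: dict[str, list[dict]] = defaultdict(list)
    for run in sarif.get("runs", []):
        for result in run.get("results", []):
            # "warning" is SARIF's default when a result omits "level"; a fuller
            # version would check the rule's defaultConfiguration first.
            level = result.get("level", "warning")
            loc = (result.get("locations") or [{}])[0].get("physicalLocation", {})
            groups[level].append({
                "rule": result.get("ruleId", "unknown"),
                "message": result.get("message", {}).get("text", ""),
                "uri": loc.get("artifactLocation", {}).get("uri", ""),
                "line": loc.get("region", {}).get("startLine", 0),
            })
    return dict(groups)


def render_html(groups: dict[str, list[dict]]) -> str:
    """Render findings as a simple HTML page, one section per level."""
    parts = ["<html><body><h1>Scan report</h1>"]
    for level in LEVEL_ORDER:
        findings = groups.get(level, [])
        if not findings:
            continue
        parts.append(f"<h2>{level} ({len(findings)})</h2><ul>")
        for item in findings:
            # "#L<line>" mimics GitHub-style line anchors (an assumption).
            href = html.escape(f"{item['uri']}#L{item['line']}", quote=True)
            parts.append(
                f'<li><a href="{href}">{html.escape(item["uri"])}:{item["line"]}</a> '
                f'[{html.escape(item["rule"])}] {html.escape(item["message"])}</li>'
            )
        parts.append("</ul>")
    parts.append("</body></html>")
    return "\n".join(parts)
```

Escaping every field matters here: finding messages often quote source code, and an unescaped `<script>` in a security report would be an ironic bug.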

Things That Didn’t Go as Planned

It wasn’t all smooth sailing. Some flops worth sharing:

- Running too many queries meant sluggish scans and tons of noise.

- AI-only logic bug detection was hit-or-miss. Context is hard.

- Auto-fixing issues? Big mistake. An automatic "fix" for one false positive broke a login flow.

Lesson learned:

Flag issues.

Don’t auto-fix without validation.

Still, that’s part of the fun. Break things. Learn. Iterate.

What’s Next?

There’s tons of potential here:

- Natural language explanations of findings.

- GitHub bot integration.

- Real-time scan hooks on commits.

But even without all that, this tool already helps cut through clutter and surface real issues—before they go live.

It’s not a replacement for manual reviews or pen tests. But it reduces the burden, especially in fast-moving projects.

Conclusion

If you work with Python apps—or maintain internal tools—you should try this hybrid approach.

- CodeQL gives you precision.

- AI gives you context and adaptability.

Together? You get a scanner that can keep getting smarter over time.

You don’t need to be a security pro or machine learning expert to build something like this. Just a bit of curiosity. And maybe a few weekends.

Hope this sparked a few ideas. Or at least made security feel a little less painful and a little more powerful.
